The Comprehensive Guide to Prompt Engineering for Gen AI [2025]

4 min read | By Admin | 09 May 2025 |

Prompt engineering is the key to utilizing large language models (LLMs) effectively and efficiently. Ever since generative AI gained its popularity across the world, the need for a new discipline of creating prompts arose. With the wide use of LLMs across use cases, the need for crafting the right prompts is what will extract the best LLM capabilities and its performance.

AI developers and prompt engineers constantly train LLMs for diverse scenarios, thus shaping them to be easily understood and make conversations in response to natural language inputs. It is a rather iterative process and LLMs need to be continually trained in order to get the most relevant and expected outputs for different styles of prompts and word choices.

This blog is your comprehensive prompt engineering guide that clubs some of the most effective tips for gen AI users and LLM engineers in 2025.

What is AI Prompting?

AI prompting is the process of giving a curated input, question, or a situation to generative AI tools like Chatgpt, Claude, Gemini, etc, to extract the desired output in the form of answers, code, image generation, and more. The basic logic here is that the more effective the prompt is, the most accurate the responses are. In short, meaningful outputs are received when meaningful inputs are given. A good prompt engineering technique helps clearly define what the scenario is, what kind of response is expected to be generated, and with proper context, reasoning, etc.

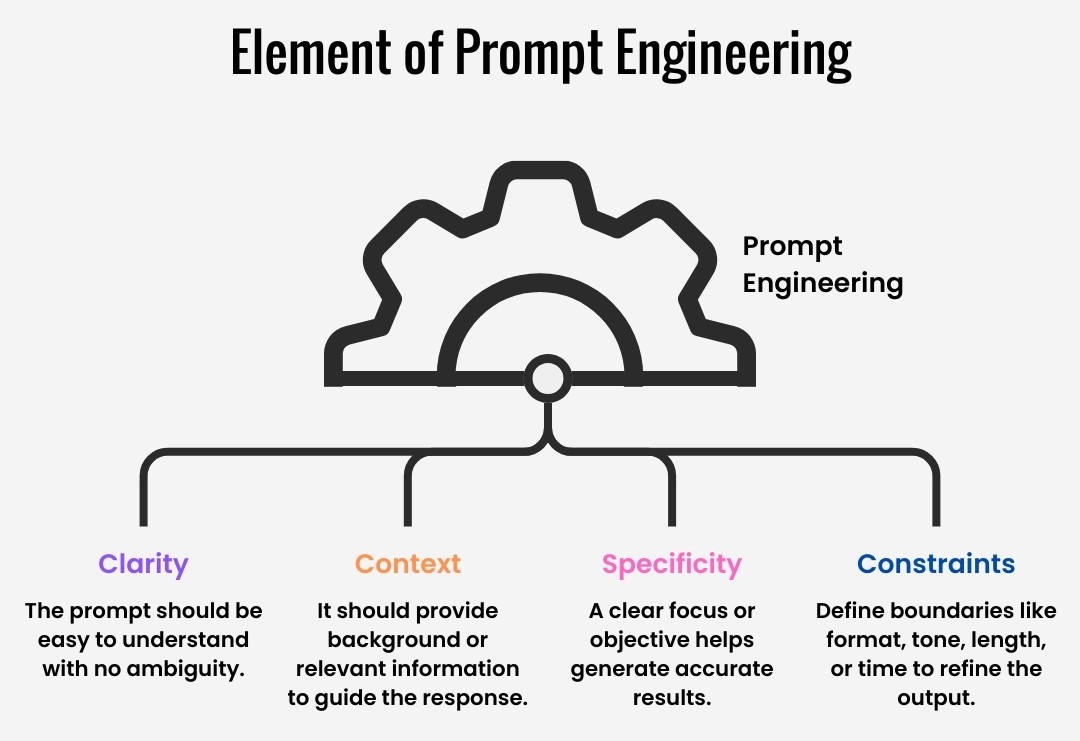

Prompt Elements

It is crucial to understand the key elements that will ease prompting large language models.

Instruction:

This refers to the type of input given—question, or other commands. This is the most critical element in prompt engineering.

Context:

Describe the scenario or situation you want the machine to understand before you put out a question.

Input Data:

References of other sources of information the LLM should process for generating a response.

Output Format:

Specify how you want the output structured—e.g., list, paragraph, table, or JSON.

Output Length:

Use token limits to manage how long the model’s response should be. Shorter limits yield brief answers; higher limits allow for deeper detail.

Top-k Sampling:

A lower value (like k=10) keeps output more focused; a higher value adds creativity.

Top-p Sampling (Nucleus Sampling):

A top-p value of 0.9 means the model picks from the smallest set of words whose combined probability is 90%, balancing coherence and diversity.

Sounds Interesting?

Looking for a detailed prompt engineering tutorial?

Read Google’s Whitepaper on Prompt Engineering (Source: Kaggle.com)

Must-know Prompt Engineering Tips & Best Practices

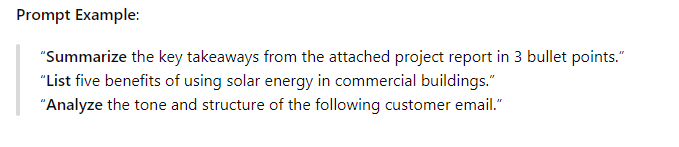

1. Choose the right command

The right command makes all the difference. Use hook words that precisely describe what you are trying to say and the response you expect. Words like “Explain, Summarize, Analyze, Answer, List, etc” are certain examples that will give you accurate responses in the format or order you require.

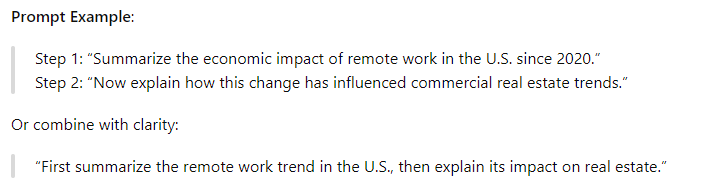

2. Split up complex questions

Not all questions are simple. LLMs are trained in such a way that it even understands complex queries. However, designing a complex prompt in a step-by-step fashion or splitting up facts and queries will enable the machine to generate better responses.

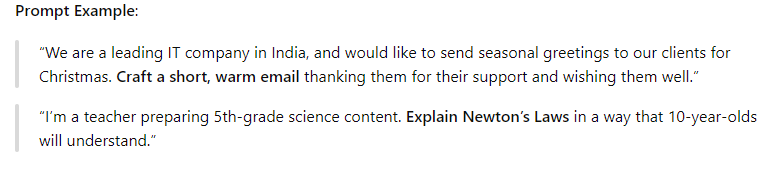

3. Use Contextual text

Describe the situation. Phrases like, “We are a leading IT company in India, and would like to send seasonal greetings to our clients for Christmas. Craft a short email for the same”. This describes the scenario in which an email is needed.

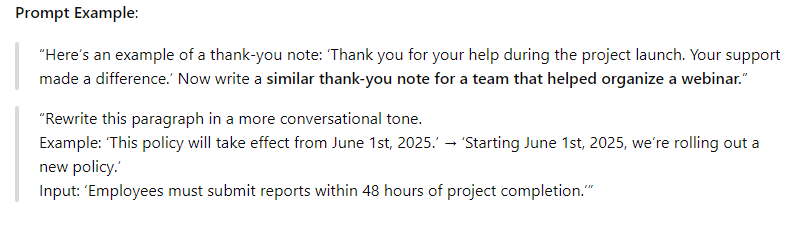

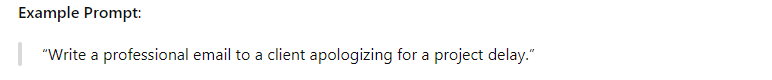

4. Provide examples

There are situations where there are no direct answers to a question. Give references or samples that better input the LLM on what the instruction is and what time of response would be most relevant.

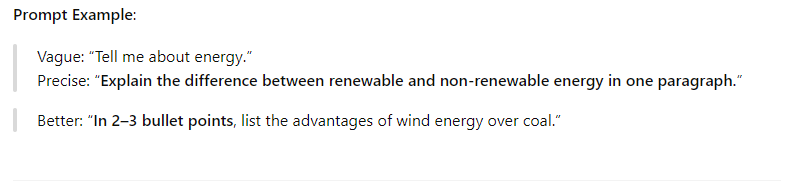

5. Be precise

One of the most key prompt engineering tips, but often overlooked is precision and specificity. There are chances that sometimes, too much explanation can confuse the LLM. Rather be simple and direct for questions that don’t require lengthy instructions.

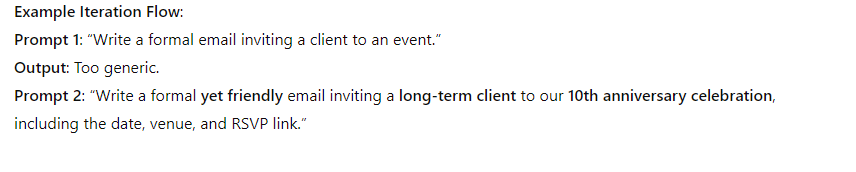

6. Keep Iterating

The best capabilities of an LLM can be extracted only on iteration. It is how well you tweak and respond to its response that will help shape the answer you require.

Different Prompting Techniques

Here are the different prompting techniques one can use for diverse scenarios.

General Prompting / Zero-shot

Zero-shot is a simple and direct prompting style. Prompts of this type usually have no references or examples. This is a first step to start with for beginners.

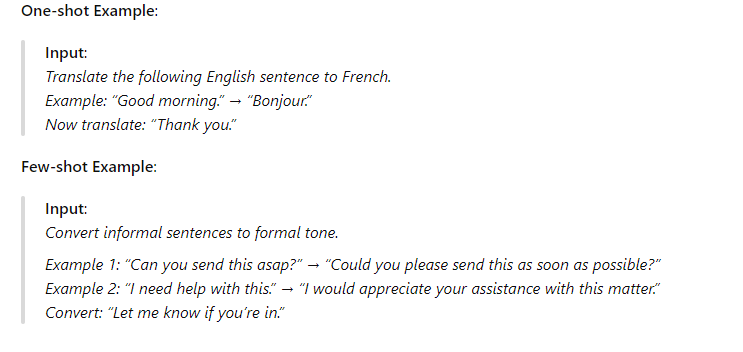

One-shot & Few-shot

This refers to the user inputting one or more examples and references for the type of response expected. One-shot involves one example and a few-shot prompt is about giving 3-5 examples in the instruction.

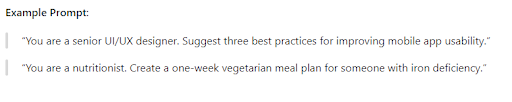

Role Prompting

This works by assigning a specific role or persona to the LLM to craft a specific response.

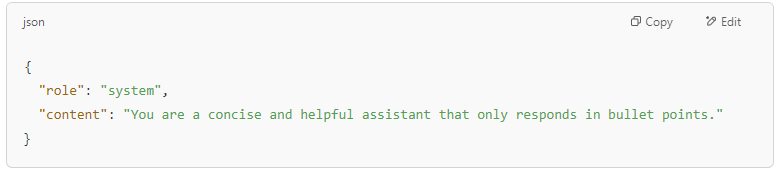

System Prompting

System prompting is a type of setting where overarching instructions are given to define the LLM’s behavior in a particular session.

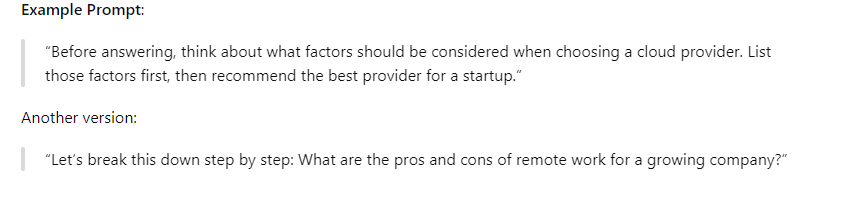

Step-back Prompting

Step-back prompting is majorly used in scenarios where reasoning is required. It instructs the LLM to step back and think before generating an answer with appropriate reasoning. Usually used in complex tasks or tasks that depend on logic.

Prompt Engineering for LLMs (Example Prompts)

The benefits of prompt engineering are plenty across different use cases and here are a few examples that illustrate the same.

| Use Case | Prompt Example |

|---|---|

| Email Writing | “You are a professional HR manager. Draft a welcome email to a new employee joining the marketing team.” |

| Coding | “You are a Python expert. Write a function to validate an email address using regex.” |

| Data Analysis | “Summarize the following data and explain the key takeaways” |

| Customer Support | “You are a responsive chatbot for an e-commerce store. Handle a complaint about a delayed delivery.” |

| Summarization | “Summarize the following article into a 3-sentence executive summary, highlighting only the most important facts.” |

| Math Problem Solving | “Solve this problem step by step: A train travels 120 km in 2 hours. What is its average speed in km/h?” |

| Image Generation | “Create an image of a smart city with sustainable energy.” |

How to Optimize Prompts?

Best prompts are the ones optimized. With better optimization and tweaks, the more accurate responses are received. To master prompt engineering for generative AI, refine your inputs with these strategies:

● Try and compare various prompt formats.

● For repetitive tasks, Use prompt templates.

● Fine-tune with temperature and max_tokens configurations.

● Chain prompts for sequential reasoning.

● Surround user-input with delimiters (or tokens) from the instructions.

● Prompt Engineering Applications.

The Future of Prompt Engineering

As AI becomes embedded in more products, workflows, and industries, the need for precision in interacting with large language models will continue to rise. Prompt engineering in 2025 isn’t just a developer’s tool anymore—it’s becoming a critical skill for marketers, product managers, researchers, and customer support teams alike.

According to recent market research,

The global prompt engineering market size is estimated at USD 222.1 million in 2023 and is projected to grow at a (CAGR) of 32.8% from 2024 to 2030.

This explosive growth is driven by the rapid adoption of generative AI tools and the rising demand for better AI-human communication.

With this momentum, the future of prompt engineering is both promising and essential. Those who invest in mastering prompt engineering tips, stay updated with evolving techniques, and understand the logic behind AI behavior will lead the next wave of innovation.

The latest from our editors

Join over 150,000+ subscribers who get our best digital insights, strategies and tips delivered straight to their inbox.