4 min read | By Admin | 25 August 2025

How can we ensure that AI is developed and applied ethically as it becomes increasingly embedded in our lives? AI already influences decisions with real-world consequences in areas from healthcare and finance to education. This growing reliance makes ethics essential. This blog explores what responsible AI software means, why ethical AI matters, and how organizations can approach ethical development to achieve trustworthy, fair, and accountable outcomes.

Source: IBM

Responsible AI refers to designing, developing, and deploying AI systems with a focus on fairness, transparency, accountability, and safety for end users. Its guiding idea is that AI should serve humanity and uphold human rights while causing as little harm as possible. This includes attention to how AI interacts with society at large and applying an ethical perspective in every phase of the software life cycle.

Ethical AI is essential because poorly designed or ill-intentioned AI systems can amplify existing harms in society, infringe on privacy, and cause unintended damage. The case for AI ethics rests on the premise that technology should work for everyone, not just a few. AI systems built without well-defined ethical principles have been marked by discrimination, exclusion, misinformation, and a loss of public trust.

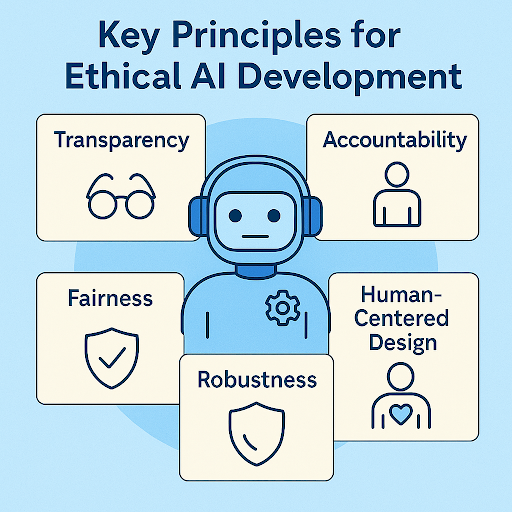

As AI progresses into what can rightly be called a revolution in business and everyday life, strong ethics must be its foundation. Responsible AI is not purely about technical advancement; it also concerns fairness, accountability, and human welfare. The following principles form the core of ethical AI development:

Transparency means making public how AI systems are tested, what data they use, and how they reach decisions. Transparent AI systems, supported by Explainable AI (XAI) techniques, are generally preferred because they are easier to audit, explain, and improve.

Accountability ensures that when AI decisions are incorrect, someone is responsible for correcting the errors and making improvements. Mechanisms for AI accountability include audits, feedback loops, and governance boards.

Fairness involves using training data that is as free of bias as possible, testing for disparate impact across groups, and continuously monitoring outcomes to verify that AI systems do not discriminate in practice. Safety requires AI systems to be robust and resilient to attacks, with designs that combine technical safeguards with human oversight.

Keeping human values at the center of AI development ensures that systems support human decision-making rather than replacing or working against people.
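To make "testing for disparate impact" more concrete, here is a minimal sketch that compares the rate of favorable outcomes across groups. It uses the disparate impact ratio (lowest group selection rate divided by the highest) and the common "four-fifths" rule of thumb from US employment-selection guidelines, which treats a ratio below 0.8 as a warning sign. The function names, the sample decisions, and the group labels are hypothetical illustrations, not part of any specific toolkit.

```python
from collections import defaultdict


def selection_rates(outcomes, groups):
    """Return the share of favorable outcomes (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes, groups):
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group received a favorable outcome")
    return min(rates.values()) / highest


# Hypothetical loan-approval decisions (1 = approved) for two applicant groups.
decisions = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
group_ids = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

ratio = disparate_impact_ratio(decisions, group_ids)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # four-fifths rule of thumb
    print("warning: possible disparate impact; review the model and its data")
```

Here group A is approved 80% of the time and group B 40%, so the ratio is 0.50 and the warning fires. A low ratio is a signal to investigate, not proof of discrimination on its own.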

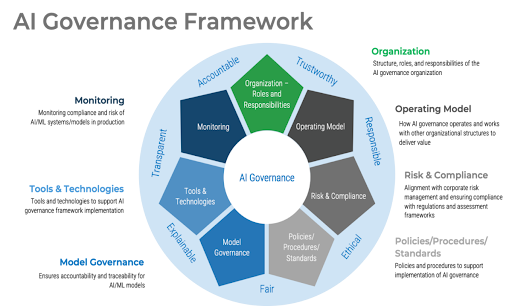

AI development should follow sound governance frameworks to ensure ethical conduct. Such frameworks establish binding policies, roles, and procedures that govern the design, development, and deployment of AI systems. Common elements of an effective AI governance framework include the following:

Define the standards, legal requirements, and ethical guidelines that developers and stakeholders must follow throughout the AI lifecycle.

Provide for regular checks for bias and for risks arising from an AI system's operation, misuse, or unforeseen side effects, along with mechanisms to mitigate them in good time.

Appoint a person to oversee all the aspects of AI governance: technical, ethical, legal, and business.

Create an audit plan, including third-party audits, to review AI behavior, regulatory compliance, and performance against the defined ethical goals.

Ensure that decision-making procedures, data sources, and observations about model behavior are documented and available for later examination and revision.
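The documentation and audit elements above can be supported by something as simple as an append-only decision log. The sketch below assumes a hypothetical record schema (`DecisionRecord` with model version, data source, inputs, output, and explanation) and stores one JSON object per line, so earlier entries are never rewritten and auditors can replay them later. It is an illustration under those assumptions, not a prescribed format.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One documented AI decision (hypothetical schema for illustration)."""
    model_version: str
    data_source: str
    inputs: dict
    output: str
    explanation: str
    # UTC timestamp filled in automatically when the record is created.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(path, record):
    """Append the record as one JSON line; existing lines are left untouched."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")


def load_records(path):
    """Read every record back, e.g. for an internal or third-party audit."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Example: log one hypothetical credit decision to a fresh temporary directory.
log_path = os.path.join(tempfile.mkdtemp(), "decision_log.jsonl")
append_record(log_path, DecisionRecord(
    model_version="credit-model-1.3",
    data_source="applications_2025_q2",
    inputs={"income": 52000, "loan_amount": 15000},
    output="approved",
    explanation="income-to-loan ratio above policy threshold",
))
records = load_records(log_path)
print(records[0]["model_version"], records[0]["output"])
```

Appending rather than overwriting keeps a simple history; a production system would typically add access controls and tamper protection on top.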

Bluelupin

AI is transforming industries, accelerating innovation, and reshaping decision-making across sectors. This rapid development raises a pressing question: will AI act ethically? If AI systems drift away from ethics, unintended consequences may follow, such as amplified bias, unjustified secrecy, and an erosion of trust. Here are some best practices for developing and implementing ethical AI.

Integrate ethical reviews and risk assessments from the design phase.

Bring together interdisciplinary teams of ethicists, domain experts, and everyday users to identify potential ethical blind spots.

AI systems should be tested regularly for sources of bias, for fairness, and for explainability.

User feedback should be gathered and acted upon to build transparency and trust.

Developers, leaders, and users should be educated about AI ethics and what responsible behavior looks like in practice.
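Regular bias testing can be automated as a check that runs whenever a model is retrained. The sketch below uses the "equal opportunity" criterion, which compares true positive rates (the share of genuinely qualified cases the model approves) across groups, and fails if the gap exceeds a tolerance. The 0.1 tolerance, the function names, and the evaluation data are hypothetical choices for illustration; the right metric and threshold depend on the application.

```python
def true_positive_rate(y_true, y_pred):
    """Share of actual positives that the model also predicts as positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not preds_for_positives:
        raise ValueError("no positive examples in this group")
    return sum(preds_for_positives) / len(preds_for_positives)


def equal_opportunity_gap(y_true, y_pred, groups):
    """Largest difference in true positive rate between any two groups."""
    rates = []
    for g in set(groups):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        rates.append(true_positive_rate([y_true[i] for i in idx],
                                        [y_pred[i] for i in idx]))
    return max(rates) - min(rates)


def check_fairness(y_true, y_pred, groups, max_gap=0.1):
    """Raise if the gap exceeds the tolerance, e.g. to block a model release."""
    gap = equal_opportunity_gap(y_true, y_pred, groups)
    if gap > max_gap:
        raise AssertionError(f"equal opportunity gap {gap:.2f} exceeds {max_gap}")
    return gap


# Hypothetical evaluation data: true labels, model predictions, group membership.
y_true = [1, 1, 1, 0, 1, 1, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

try:
    check_fairness(y_true, y_pred, groups, max_gap=0.1)
except AssertionError as err:
    print("fairness check failed:", err)
```

In this example the model approves two of three qualified applicants in group A but only one of three in group B, so the check fails and the model would go back for review.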

As AI continues to evolve, building it responsibly should remain a priority. Navigating the ethical landscape takes more than technical know-how; it requires a deep understanding of AI ethics, a commitment to fairness and accountability, and the flexibility to meet new challenges. Today, good AI governance, explainability, and human-centered design should be promoted so that AI becomes a force for good. The future of AI depends not just on what we build but on how responsibly we build it.

To make AI ethical, organizations need to incorporate ethical principles at the design stage, involve diverse teams, test continuously for fairness and bias, and gather user feedback on their responsible AI practices.

AI bias arises from systematic errors in decision-making caused by biased training data, flawed algorithms, and a lack of diversity in development teams, producing unfair and discriminatory outcomes.

AI governance requires organizations to establish formal policies, risk management procedures, and clear lines of responsibility, and to maintain transparency through regular audits in order to achieve ethical compliance and accountability.
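Risk management procedures and defined responsibilities are often captured in a risk register. The sketch below assumes a common risk-matrix convention: likelihood and impact are each scored from 1 to 5, multiplied into a single score, and anything at or above a threshold is escalated for governance review. The `Risk` class, the threshold of 12, and the example entries are hypothetical; organizations set their own scales and escalation rules.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical AI risk register."""
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)
    owner: str       # person accountable for mitigation

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(register, review_threshold=12):
    """Sort risks by score (highest first) and flag those needing board review."""
    ranked = sorted(register, key=lambda r: r.score, reverse=True)
    return [(r.description, r.score, r.owner, r.score >= review_threshold)
            for r in ranked]


register = [
    Risk("Model drift after a policy change", 3, 3, "ML engineer"),
    Risk("Training data under-represents older applicants", 4, 4, "Data lead"),
    Risk("Explanations unclear to customers", 2, 3, "Product owner"),
]

for description, score, owner, needs_review in prioritize(register):
    status = "REVIEW" if needs_review else "monitor"
    print(f"{score:>2}  {status:<7} {owner}: {description}")
```

Naming an owner for every risk ties the register directly to the accountability structures described above.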

Developing unbiased AI requires collecting datasets that represent diverse populations, conducting continuous bias assessments, bringing multiple fields of expertise to the project, and applying fairness constraints during model training.
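Fairness constraints during training usually depend on the specific learning library. A simpler, related technique that works before training is reweighing (Kamiran and Calders): each example gets the weight P(group) × P(label) / P(group, label), so that in the weighted data the label no longer depends on group membership. Note that this is a pre-processing method swapped in for illustration, not a training-time constraint; the data and helper names below are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


# Hypothetical training data: group A has mostly positive labels, group B mostly negative.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
uniform = [1.0] * len(labels)
for g in ("A", "B"):
    before = weighted_positive_rate(groups, labels, uniform, g)
    after = weighted_positive_rate(groups, labels, weights, g)
    print(f"group {g}: positive rate {before:.2f} before, {after:.2f} after reweighing")
```

Before reweighing, the positive rates are 0.75 for group A and 0.25 for group B; after reweighing, both equal the overall rate of 0.50. The weights can then be passed to any learner that accepts per-sample weights, for example the `sample_weight` argument of `fit` in many scikit-learn estimators.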

Explainable AI (XAI) refers to AI systems that provide human users with understandable explanations of how they reach decisions, which increases trust and accountability.
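One of the simplest forms of explanation applies to linear scoring models: each feature's contribution is its weight multiplied by how far the input is from a reference ("baseline") input, and these contributions add up exactly to the difference between the applicant's score and the baseline score. The sketch below assumes a hypothetical credit-scoring model with made-up weights and an "average applicant" baseline. For non-linear models, tools such as SHAP or LIME approximate similar per-feature attributions.

```python
def explain_linear_score(weights, bias, features, baseline):
    """Split a linear score into per-feature contributions w_i * (x_i - b_i)."""
    contributions = {
        name: weights[name] * (features[name] - baseline[name]) for name in weights
    }
    baseline_score = bias + sum(weights[n] * baseline[n] for n in weights)
    # The baseline score plus all contributions equals the applicant's score.
    score = baseline_score + sum(contributions.values())
    return score, baseline_score, contributions


# Hypothetical model and inputs (income in thousands, debt as a share of income).
weights = {"income_k": 0.04, "debt_ratio": -2.0, "years_employed": 0.1}
bias = -1.0
baseline = {"income_k": 50, "debt_ratio": 0.3, "years_employed": 5}
applicant = {"income_k": 40, "debt_ratio": 0.5, "years_employed": 2}

score, base, contributions = explain_linear_score(weights, bias, applicant, baseline)
print(f"score {score:+.2f} vs. baseline {base:+.2f}")
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"  {name:>15}: {value:+.2f}")
```

Each printed line tells a user which factor pushed the decision up or down and by how much, which is the kind of understandable explanation XAI aims to provide.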
